Clarification: Snappy and Flatpak

Recently, I posted a piece about distributions consolidating around a consistent app store. In it I mentioned Flatpak as a potential component and some people wondered why I didn’t recommend Snappy, particularly due to my Canonical heritage.

To be clear (and to clear up my inarticulateness): I am a fan of both Snappy and Flatpak. They are both important technologies solving important problems and they are both driven by great teams. To be frank, my main interest and focus in my post was the notion of a consolidated app store platform as opposed to what the specific individual components would be (other people can make a better judgement call on that). Thus, please don’t read my single-line mention of Flatpak as any criticism of Snappy. I realize that this may have been misconstrued as me suggesting that Snappy is somehow not up to the job, which was absolutely not my intent.

Part of the reason I mentioned Flatpak is that I feel there is a natural center of gravity forming around the GNOME Shell and platform, which many distros are shipping. Within the context of that platform I have seen Flatpak commonly mentioned as a component, hence why I mentioned it. Of course, there is no reason why Snappy couldn’t be that component too, and the Snappy team have been doing great work. I was also under the impression (entirely incorrectly) that Snappy is focusing more on the cloud/server market. It has become clear that the desktop is very much within the focus and domain of Snappy, and I apologize for the confusion.

So, to clear up any potential confusion (I can be an inarticulate clod at times), I am a big fan of Snappy, big fan of Flatpak, and an even bigger fan of a consolidated app store that multiple distros use. My view is simple: competition is healthy, and we have two great projects and teams vying to make app installation and management on Linux easier. Viva la desktop!

Consolidating the Linux Desktop App Story: An Idea

When I joined Canonical in 2006, the Linux desktop world operated in a very upstream way. All distributions used the Linux kernel, all used X, and the majority shipped either GNOME, KDE, or both.

The following years mixed things up a little. As various companies pushed for consumer-grade Linux-based platforms (e.g. Ubuntu, Fedora, Elementary, Android etc), the components in a typical Linux platform diversified. Unity, Mir, Wayland, Cinnamon, GNOME Shell, Pantheon, Plasma, Flatpak, Snappy, and others entered the fray. This was a period of innovation, but also endless levels of consternation: people bickering left, right, and center, about which of these components were the best choices.

This is normal in technology, both the innovation and the flapping of feathers in blog posts and forums. As is also normal, when the dust settled a natural set of norms started to take shape.

Today, I believe we face an opportunity to consolidate around some key components, not just to go faster, but to also avoid the mistakes of the past.

App Stores are Hard

Historically, one of the difficulties with shipping a Linux desktop was differentiation.

I remember this vividly in my days at Canonical. People always praised Ubuntu for two main reasons: (1) you could get the exciting new technology in Ubuntu first, and (2) shit just worked.

While the latter was and is always key, the former was always going to have a short shelf life. While enthusiasts are willing to upgrade their desktops every six months, businesses and non-nerds are not, so Ubuntu needed to have a way to differentiate.

The result of course was Unity, Scopes, and the Ubuntu Software Center (and associated developer program). Here’s the thing though: building an app store is relatively simple, but building the ecosystem which makes developers want to get their applications in that store is really hard.

Most app developers and ISVs don’t care about your product, they care about the size of the market you can expose their product to. They also care about a logical revenue model and simplicity in delivering their app on your store: they want to make money without jumping through hoops.

Building all of this requires huge amounts of work, including engineering, developer engagement, on-boarding, and business development. We took a pretty good swing at it in Ubuntu and it was hard, and Microsoft poured millions of dollars into it for their phone and even that didn’t work.

The moral of this story is that differentiation is important, but we have to be realistic in what it takes to differentiate at this level. I think if we want the Linux desktop to grow, we have to strike the right balance between differentiation (giving people a reason to use your product) and consistency (not re-inventing the wheel).

Now, critics will say that they knew this all along and Ubuntu should have never focused on Unity, Scopes etc. I don’t believe it is as clear cut as those critics might think: few Linux platforms (if any?) had taken a serious whack at building a consumer-grade app and developer experience. We tried, it was not successful, and instead of digging up the past I would rather ensure we can inform the future.

The good news is that I think we have a better opportunity for this than ever before.

Building a Standard Linux Desktop Core

What I want to see is that the various distributions put at the core of their platform a central app repository that is based on Flatpak, complete with the ecosystem pieces (e.g. an interface for developers to upload their apps, scripts for scanning packages for security issues, tools to edit app store pages, a payments mechanism to support the purchasing of apps etc).

All distributions would then use this platform instead of trying to reinvent the wheel, but they could customize their own app store experience and filter apps in different ways. For example, a GNOME-based distribution may only want to pull in GTK-based apps, another distro may only want to support free software apps, and another distro may only want apps written in a certain language. This way, no-one is forced into the same policy about what apps they ship: the shared app platform is a big bucket that you can pull the right pieces from.
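To make the "big bucket" idea a little more concrete, here is a minimal sketch of one shared catalogue with per-distro filters. Everything in it is an assumption for illustration: the `App` fields, the app names, and the `storefront` helper are hypothetical and are not part of Flatpak, Snappy, or GNOME Software.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class App:
    """Hypothetical metadata a shared app repository might publish."""
    name: str
    toolkit: str
    free_software: bool
    language: str


# A policy is just a yes/no question a distro asks about each app.
Policy = Callable[[App], bool]


def storefront(catalogue: Iterable[App], *policies: Policy) -> List[str]:
    """Return the names of the apps that pass every policy a distro has set."""
    return [app.name for app in catalogue if all(p(app) for p in policies)]


# Made-up entries standing in for the shared catalogue.
CATALOGUE = [
    App("ExampleEditor", toolkit="GTK", free_software=True, language="C"),
    App("ExampleChat", toolkit="Electron", free_software=False, language="JavaScript"),
    App("ExamplePlayer", toolkit="Qt", free_software=True, language="C++"),
]

gtk_only = lambda app: app.toolkit == "GTK"
free_only = lambda app: app.free_software

print(storefront(CATALOGUE, gtk_only))   # a GNOME-focused distro
print(storefront(CATALOGUE, free_only))  # a free-software-only distro
print(storefront(CATALOGUE))             # a distro that takes everything
```

The point of the sketch is that the catalogue is shared while the policy lives with each distro, so adding a new distro means writing a new filter, not a new store.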

This would have a number of benefits:

- We consolidate resources around a central platform.

- From my experience, app developers and ISVs are freaked out about the Linux world due to all the different platforms. This would provide a singular way of addressing Linux as a platform.

- We provide a single set of usage data to app developers and ISVs (instead of an individual distro’s stats for downloads, we can show all distros that use the system for download stats). This is an important marketing benefit.

- Better security: updates can be delivered to multiple different distributions.

Now, of course, this will require some collaboration and there will be some elephants in the room to figure out.

One major elephant will be whether this platform supports non-free software. To be completely blunt, unless we support non-free apps (e.g. Slack, Steam, Photoshop, Spotify etc), it will never break into the wider consumer market. People judge their platforms based upon whether they can use the things they like and irrespective of the rights and wrongs in the world, most people depend on or want non-free apps. Of course, I wish we could have a free software and open source technology world like the rest of you, but I think we need to be realistic.

This wouldn’t matter though: distros with a focus on free software can merely filter only the apps that are free software for their users. For another distro that is open to non-free apps, they can also benefit from the same platform.

This approach will offer huge value for companies investing in the Linux desktop too: reduced engineering costs (and expanded innovation), differentiation in how you present and offer apps, and the benefit of likely more app devs and ISVs wanting to ship apps (thus making the platform more valuable).

A Good Start

The good news is that today I feel we have a bunch of the key pieces in place to support this kind of initiative, including:

- GNOME Software – a simple and powerful store for browsing and installing software.

- Flatpak – Flatpak is a simple and efficient packaging format for delivering applications (I am recommending Flatpak instead of Snappy as Snappy seems to be more focused on the cloud and server side of things these days, and Flatpak isn’t well suited to cloud/server).

- Wayland – Wayland is a modern display server.

If we took these pieces, brought them under the banner of something such as FreeDesktop, and built support from the various distros (e.g. Ubuntu, Fedora, Endless, Debian, Elementary etc), I think it would be a phenomenally valuable initiative and really optimize the success of the Linux desktop.

I would love to hear your thoughts on this, so please share them in the comments. Good idea? Bad idea? Somewhere in-between?

UPDATE: It seems I inadvertently left the impression in this post that I was not supporting Snappy as a potential component here. Please see this post for a clarification.

If you thought this was interesting, you might want to Join As a Member. This will ensure you never miss an article, you get access to exclusive member-only content, early-access to new projects and member-only events, and the possibility of winning free 1-on-1 workshops. It is entirely FREE and I will never sell your information or spam you (those people suck). Find out more here.

Open Community Conference: Updates, CFP, Webinar, and Prizes

A little while back I announced that I am starting a new conference called the Open Community Conference in conjunction with my friends at the Linux Foundation.

Put simply: the Open Community Conference provides a raft of presentations, panels, and BoFs with pragmatic guidance for building and engaging productive communities.

While my other event, the Community Leadership Summit provides a set of workshops for community managers to shape community strategy, the Open Community Conference presents easily consumable and applicable best practice for organizations and practitioners. It is an ideal event for those of you who want to learn pragmatic approaches for how to evolve community strategy with your products/services.

I am running the Open Community Conference in two locations this year:

- Los Angeles – 11th – 14th September 2017

- Prague – 23rd – 26th October 2017

The Open Community Conference is one of the major events as part of the Open Source Summit in each location.

Open Community Conference America Schedule Published

I am delighted to share that the schedule for the Open Community Conference in Los Angeles is now available here.

Some sessions I am particularly excited about include:

- Aim to Be an Open Source Zero – Guy Martin, Autodesk

- Building Open Source Project Infrastructures – Elizabeth K. Joseph, Mesosphere

- Scaling Open Source – Lessons Learned at the Apache Software Foundation – Phil Steitz, Apache Software Foundation

- So You’ve Decided You Need an Open Source Program Office – Duane O’Brien, PayPal & Nithya Ruff, Comcast

- Why I Forked My Own Project and My Own Company – Frank Karlitschek, ownCloud

- So You Have a Code of Conduct… Now What? – Sarah Sharp, Otter Tech

- Bootstrapping Community – Colin Charles, Percona

- Fora, Q&A, Mailing Lists, Chat…Oh My! – Jeremy Garcia, LinuxQuestions.org / Datadog

- Open Source Licensing 101 – Jim Jagielski, Capital One

- Selling Open Source, Keeping Your Soul – Jessica Rose, Crate.io

- Venture Capital Community: Applying Open Source Principles to Disrupt a Traditional Industry – Cory Bolotsky, Underscore VC

There are many more sessions as part of the schedule too, covering a diverse range of areas.

I will also be delivering a keynote and an additional session called Building Predictable Community: Strategy, Incentives, Value, and Psychology.

Webinar: 24th July at 9.30am Pacific

I will also be running a webinar on Monday 24th July 2017 at 9.30am Pacific where I will talk about the conference and answer questions about community strategy.

Also, (and as a sneak peek, it hasn’t been announced yet 😉 ), if you post questions to me on Twitter with the #AskJono hashtag about community strategy, leadership, open source, innersource, or the conference, you can win 3 free tickets to the event (including all the sessions, networking events, and more).

All of the questions will be answered on the webinar.

Go and sign up for the webinar here!

Open Community Conference Europe CFP Closes 8th July

Finally, for the Open Community Conference in Europe, the Call For Papers closes on Sat 8th July 2017 (which is tomorrow as I write this).

If you are interested in sharing your pragmatic experience and recommendations about building powerful, productive, engaged communities, go and submit your paper here.

UPDATE: the CFP is now closed.

Optimizing On-Ramps, Community Strategy, and On-Boarding

As part of my work, I tend to write a lot of articles, participate in interviews, and various other things. Previously I have not done a very good job at sharing these things on my blog, but as the number of people who subscribe to my posts seems to be growing, I am going to make a point of sharing these pieces here.

So, here are some recent pieces that you might be interested in.

Designing For Participation: Take Your Site’s UX to the Next Level

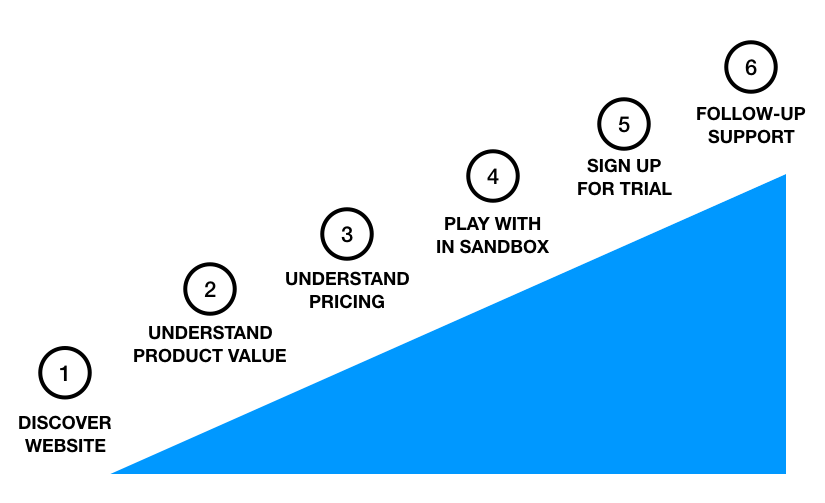

This week a new article I wrote for Velocitize went online. It covers how every website for a product or project can be broken down into an on-ramp that we can optimize to get the outcome we want. From the piece:

Fundamentally, websites should (a) deliver information we want the reader to consume, and (b) encourage user behavior we want to see. For example, we might want to show someone our product and then have them sign up for a demo. Or, we might want someone to read and comment on our blog.

First, sit down and think of these desired core outcomes. Now, for each, map out an on-ramp that breaks down how someone would get there.

The piece then walks through a sample on-ramp and how this can be used to break down the experience into pieces we can optimize.
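As a rough illustration of breaking an on-ramp into pieces, the sketch below takes a list of steps with visitor counts and finds the step where the most people drop off. The step names and numbers are invented for the example, and the `step_conversion` and `weakest_step` helpers are hypothetical; they are not taken from the Velocitize article.

```python
def step_conversion(steps):
    """Given ordered (step name, visitors who reached it) pairs,
    return the share of visitors who made it from each step to the next."""
    rates = []
    for (prev_name, prev_count), (name, count) in zip(steps, steps[1:]):
        rate = count / prev_count if prev_count else 0.0
        rates.append((f"{prev_name} -> {name}", rate))
    return rates


def weakest_step(steps):
    """Return the transition with the lowest conversion rate."""
    return min(step_conversion(steps), key=lambda item: item[1])


# Invented numbers for a "sign up for a demo" on-ramp.
ON_RAMP = [
    ("Land on home page", 1000),
    ("View product page", 400),
    ("Open demo form", 200),
    ("Submit demo request", 50),
]

for transition, rate in step_conversion(ON_RAMP):
    print(f"{transition}: {rate:.0%}")

print("Optimize first:", weakest_step(ON_RAMP)[0])
```

With real analytics data in place of the made-up counts, this kind of breakdown shows which single step in the on-ramp is worth optimizing first.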

The article also runs through a checklist of recommendations for optimizing a website, including:

- Design for laziness…and SEO

- Have a clear and simple navigation

- Deliver value for users without signing up

- Have a single call to action on each page

- Test extensively with real world users

What I’ve Learned…With Jono Bacon

Recently I was asked to join an interview with OpenChannel where they asked me about a range of topics including building a community narrative, structuring community strategy, gathering community feedback, trends in commercial communities, and more.

Bad Voltage: Wikipedia, On-Boarding, and Resolving Community Issues

I co-founded a podcast called Bad Voltage which covers technology, open source, and other topics.

In the most recent show we touched on an interesting research study into Wikipedia community on-boarding, how it was optimized, and the lack of impact in solving their broader on-boarding issues. In the segment I delve into why this wasn’t particularly surprising to me, and where and how we should focus on these kinds of challenges in communities.

As usual, I recommend you subscribe to get updates with new posts, content, and recommendations direct to your email.

Innersource: A Guide to the What, Why, and How

In recent years innersource is a term that has cropped up more and more. As with all new things in technology, there has been a healthy mix of interest and suspicion around what exactly innersource is (and what it isn’t).

As a consultant I work with a range of organizations, large and small, across various markets (e.g. financial services, technology etc) to help them bring innersource into their world. So, here is a quick guide to what innersource is, why you might care, and how to get started.

What is Innersource?

In a nutshell, ‘innersource’ refers to bringing the core principles of open source and community collaboration within the walls of an organization. This involves building an internal community, collaborative engineering workflow, and culture.

This work happens entirely within the walls of the company. For all intents and purposes, the company develops an open source culture, but entirely focused on their own intellectual property, technology, and teams. This provides the benefits of open source collaboration, community, and digital transformation, but in a safe environment, particularly for highly regulated industries such as financial services.

Innersource is not a product or service that you buy and install on your network. It is instead a term that refers to the overall workflow, methodology, community, and culture that optimizes an organization for open source style collaboration.

Why do people Innersource?

Many organizations are very command-and-control driven, often as a result of their industry (e.g. highly regulated industries), the size of the organization, or how long they have been around.

Command-and-control driven organizations often hit a bottleneck in efficiency which results in some negative outcomes such as slower Time To Market, additional bureaucracy, staff frustration, reduced innovation, loss of a competitive edge, and additional costs (and waste) for operating the overall business.

An unfortunate side effect of this is that teams get siloed, and this results in reduced collaboration between projects and teams, duplication of effort, poor communication of wider company strategic goals, territorial leadership setting in, and frankly…the organization becomes a less fun and inspiring place to work.

While the benefits of open source have been clearly felt in reducing costs for consuming and building software and services, there has also been substantive value for organizations and staff who work together using an open source methodology. People feel more engaged, are able to grow their technical skills, build more effective relationships, and feel their work has more impact and meaning.

It is very important to note that innersource is not merely about optimizing how people write code. Sure, workflow is a key component, but innersource is fundamentally cultural in focus. You need both: if you (a) build an environment that has an open and efficient peer-review based workflow, and (b) build a culture that supports cross-departmental collaboration and internal community, the tangible output is, unsurprisingly, not just better code, but better teams and better products.

What are the BENEFITS of innersource for an organization?

There are a number of benefits for organizations that work in an innersource way:

- Faster Time To Market (TTM) – innersource optimizes teams to work faster and more efficiently and this reduces the time it takes to build and release new products and services.

- Better code – a collaborative peer-review process commonly results in better quality code as multiple engineers are reviewing the code for quality, optimization, and elegance.

- Better security – with more eyeballs on code due to increased collaboration, all bugs (and security flaws) are shallow. This means that issues can be identified more quickly, and thus fixed.

- Expanded innovation – you can’t successfully “tell” people to innovate. You have to build an environment that encourages employees to have and share ideas, experiment with prototypes, and collaborate together. Innersource optimizes an organization for this and the result is a permissive environment that facilitates greater innovation.

- Easier hiring – young engineers are growing up in a world where they can cut their teeth on open source projects to build up their experience. Consequently, they don’t want to work in dusty siloed organizations, they want to work in an open source way. Innersource (as well as wider open source participation) not only makes your company more attractive, but it is increasingly a requirement to attract the best talent.

- Improved skills development – with such a focus on collaboration with innersource, staff learn from each other, discover new approaches, and rectify bad habits due to peer review.

- Easier performance/audit/root cause analysis – innersource workflow results in a digital record of your entire collaborative work. This can make tracking performance, audits, and root cause analysis easier. Your organization benefits from a record of how previous work was done which can inform and illustrate future decisions.

- More efficient on-boarding for new staff – when new team members join the company, this record of work I outlined in the previous bullet helps them to see and learn from how previous decisions were made and how previous work was executed. This makes on-boarding, skills development, and learning the culture and personalities of an organization much easier.

- Easier collaboration with the public open source world – while today you may have no public open source contributions to make, if in the future you decide to either contribute to or build a public open source project, innersource will already instill the necessary workflow, process, and skills to work with public open source projects well.

What are the RISKS of innersource for an organization?

While innersource has many benefits, it is not a silver bullet. As I mentioned earlier, innersource is fundamentally about building culture, and a workflow and methodology that provides practical execution and delivery.

Building culture is hard. Here are some of the risks attached:

- It takes time – putting innersource in place takes time. I always recommend organizations to start small and iterate. As such, various people in the organization (e.g. execs and key stakeholders) will need to ensure they have realistic expectations about the delivery of this work.

- It can cause uncertainty – bringing in any new workflow and culture can cause people to feel anxious. It is always important to involve people in the formation and iteration of innersource, communicate extensively, reassure, and always be receptive to feedback.

- Purely top-down directives are often not taken seriously – innersource requires both a top-down permissive component from senior staff and bottom-up tangible projects and workflow for people to engage with. If one or the other is missing, there is a risk of failure.

- It varies from organization to organization – while the principles of innersource are often somewhat consistent, every organization’s starting point is different. As such, delivering this work will require a lot of nuance for the specifics of that organization, and you can’t merely replicate what others have done.

How do I use Innersource at my company?

In the interests of keeping this post concise, I am not going to explain how to build out an innersource program here, but I will share links to some other articles I have written on how to get started:

- How to create an internal innersource community

- 10 steps to innersource in your organization

- Building better teams with asynchronous workflow

One thing I would definitely recommend is hiring someone to help you with this work. While not critical, there is a lot of nuance attached to building the right mix of workflow, incentives, messaging, and building institutional knowledge. Obviously, this is something I provide as a consultant (more details), so if you want to discuss this further, just drop me a line.

Don’t Use Bots to Engage With People on Social Media

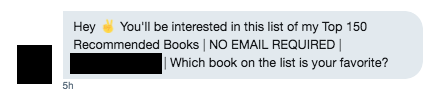

I am going to be honest with you, I am writing this post out of one part frustration and one part guidance to people who I think may be inadvertently making a mistake. I wanted to write this up as a blog post so I can send it to people when I see this happening.

It goes like this: when I follow someone on Twitter, I often get an automated Direct Message which looks something along these lines:

These messages invariably are either trying to (a) get me to look at a product they have created, (b) trying to get me to go to their website, or (c) trying to get me to follow them somewhere else such as LinkedIn.

Unfortunately, there are two similar approaches which I think are also problematic.

Firstly, some people will have an automated tweet go out (publicly) that “thanks” me for following them (as best an automated bot who doesn’t know me can thank me).

Secondly, some people will even go so far as to record a little video that personally welcomes me to their Twitter account. This is usually less than a minute long and again is published as an integrated video in a public tweet.

Why you shouldn’t do this

There are a few reasons why you might want to reconsider this:

Firstly, automated Direct Messages come across as spammy. Sure, I chose to follow you, but if my first interaction with you is advertising, it doesn’t leave a great taste in my mouth. If you are going to DM me, send me a personal message from you, not a bot (or not at all). Definitely don’t try to make that bot seem like a human: much like someone trying to suppress a yawn, we can all see it, and it looks weird.

Secondly, don’t send out the automated thank-you tweets to your public Twitter feed. This is just noise that everyone other than the people you tagged won’t care about. If you generate too much noise, people will stop following you.

Thirdly, in terms of the personal video messages (and in a similar way to the automated public thank-you messages), in addition to the noise it all seems a little…well, desperate. People can sniff desperation a mile off: if someone follows you, be confident in your value to them. Wow them with great content and interesting ideas, not fabricated personal thank-you messages delivered by a bot.

What underlies all of this is that most people want authentic human engagement. While it is perfectly fine to pre-schedule content for publication (e.g. lots of people use Buffer to have a regular drip-feed of content), automating human engagement just doesn’t hit the mark with authenticity. There is an uncanny valley that people can almost always sniff out when you try to make an automated message seem like a personal interaction.

Of course, many of the folks who do these things are perfectly well intentioned and are just trying to optimize their social media presence. Instead of doing the above things, see my 10 recommendations for social media as a starting point, and explore some other ways to engage your audience well and build growth.

Interview with Jeff Atwood on Building Communities

I recently did an interview with Jeff Atwood, co-creator of StackExchange and Discourse, about his approach to building platforms, communities, and more.

Read it here.

What is IT culture? Today’s leaders need to know

See my new post for opensource.com about how you build culture in an organization/community:

“Culture” is a pretty ambiguous word. Sure, reams of social science research explore exactly what “culture” is, but to the average Joe and Josephine the word really means something different than it does to academics. In most scenarios, “culture” seems to map more closely to something like “the set of social norms and expectations in a group of people.” By extension, then, an “IT culture” is simply “the set of social norms and expectations pertinent to a group of people working in an IT organization.”

I suspect most people see themselves as somewhat passive contributors to this thing called “culture.” Sure, we know we can all contribute to cultural change, but I don’t think most people actually feel particularly empowered to make this kind of meaningful change. On top of that, we can also observe significant changes in cultural norms that depend on variables like time and geography. An IT company in China, for example, might have a very different culture from a company in the San Francisco area. A startup in Birmingham, England will have a different culture to a similar startup in Berlin, Germany. And so on.

Culture is critical. It’s the lifeblood of an organization, but it’s complicated to understand and shape. The “IT culture” of the 1980s and 1990s differs from “IT culture” today—and it will be different again 10 years from now. Apart from generational changes, cultural norms for IT practitioners have changed, too. Today, digital technology is more social, more accessible to people with fewer technical skills, and more embedded in our consumer-oriented world than ever. We’ve learned to cherish simplicity, elegance, and design, and this has reflected the kinds of organizations that are forming.

Read it here.

Work Smarter: The Cocktail of Simplicity, Manageable Adversity, and Muntzing

Back in the 40s, TVs were giant, ugly behemoths. They were jammed with vacuum tubes, big bulky components, and were prone to overheating and failure.

Earl “Madman” Muntz was an engineer and businessman who started repairing radios when he was 8 and built his first car radio when he was 14. As only someone with the nickname ‘madman’ could do, when he worked in the TV business, he would walk around the factory floor, step in front of an unsuspecting engineer and yank components from TV sets until they stopped working. Then, he would put the last removed component back in and the set would often work, but with fewer components (thus cheaper) and often other benefits such as reduced heat.

This practice became known as muntzing. While it sounds like some awful way of hazing people with a hosepipe, it was actually a deliciously simple exercise in efficiency and cost reduction. Rather unsurprisingly, this provides an interesting lesson we can apply outside of knackered, old TVs from the forties.

Simple but not Simpler

Muntz was fundamentally zeroing in on simplicity as a way to accomplish efficiency.

He wasn’t the first. Around the same time period, Einstein, a man not especially unfamiliar with genius, said:

“Everything should be made as simple as possible, but not simpler.”

Einstein touches on the elegance in simplicity, and warns us not to be fooled into thinking simple minds create simple things. We see this every day with seemingly simple devices (e.g. the iPhone) that carefully conceal enormous amounts of complexity behind the scenes, both in terms of technology and workflow.

For the work I do in building productive and engaging communities and organizations, this is nirvana. My ultimate goal is to build human systems that deliver solid, productive, and predictable results but are simple in their instrumentation and use. As you can imagine, there is often a lot of complexity that goes into doing this.

So, Muntz and Einstein give us a good recipe: focus on simplicity as a means to accomplish efficiency, and reduce the complexity as a means to become lean. Sounds great in theory, but how do we do this?

Harnessing Adversity to Build Efficiency

There are various reasons why things become inefficient: people get lazy and take shortcuts, complexity slows things down, too many layers of abstraction contribute to this complexity, people accept the new reality of inefficiency and don’t challenge it…the list goes on.

We see this everywhere in the products we build, the organizational methodologies we have in companies and communities, the systems we have to use to file our taxes or invoices, and elsewhere. Not seen this? Go to the DMV in America. You will get it in droves.

An effective way to create efficiency and optimization is to have manageable adversity. That is, we face tough situations that are within our control, capability, and power to resolve and learn from.

Muhammad Ali said it best:

“I don’t count my situps, I only start counting when it starts hurting, when I feel pain, that’s when I start counting, cause that’s when it really counts.”

Our most difficult moments in life, when we can feel beaten down, tired, and lost, can be the most formidable times of personal growth, evolution, and development. If we therefore instill the right level of adversity into our work, complete with having the ability to resolve it (which is the key difference between adversity being a helpful thing or a discriminatory force), we develop efficiencies.

Putting This Into Practice

As such, there can be enormous value in deliberately injecting adversity into our work as a forcing function to get better results. In other words, sometimes the easiest path forward is not the best path if we want to increase our capability and creativity. Sometimes throwing in a few obstacles that people need to navigate can be a useful thing.

Here are five recommendations to consider.

1. Add intentional burdens

Baseball players would use two bats, drummers would put additional weights on their ankles, and powerlifters would lift additional weight beyond competition requirements all for the same reason: when you remove the additional burden, your performance improves.

Think about how you can instill an intentional restriction that will force you and your teammates to think creatively around how to solve the problem.

For example, an ex-colleague of mine at Canonical was once facing a very low-level bug in the Linux kernel that struck before the screen was powered up. As such, he saw no error messages to indicate the issue. His solution? He wrote a kernel driver to flash the caps lock light in Morse code and sat a sensor on the light to read the Morse back in and see the issue.

He faced a burden, and that burden generated a creative solution. In a similar way, Steve Jobs famously demanded Burrell Smith accomplish his vision of the first Macintosh with fewer hardware components than he had available. These burdens generated remarkable outcomes.

2. Require an ambitious metric

Create an ambitious metric that a solution is required to accomplish. It is incredible what people will do to accomplish a given metric, be it a score in a video game, a measurement on a device, or a target weight to get into a suit at a wedding (ahem!).

A good example here was the first XPRIZE (I used to work at XPRIZE a few years back). A $10 million prize would be awarded to a team who built a reusable spacecraft that could go up into space and back, twice in two weeks.

This ambitious requirement to win the competition made teams think creatively across a wide range of engineering challenges to accomplish the goal. The result: the birth of commercial space travel development.

Think about how you can place an ambitious requirement on the outcome of a particular project, one that really helps the team to focus on accomplishing that goal in a creative, lean, and ambitious way.

3. Iterate and optimize

An approach I use throughout my work is to break work down into smaller pieces to (a) generate data we can use to assess the success or failure of a project, and (b) use that data to iterate, improve, and test again.

This is one of the most fundamentally important approaches to evolving any kind of product or process: we can’t improve without information and iteration. As one consideration when iterating, always ask the question “how can we do more with less?”. Tiny improvements and efficiencies on a regular cadence will stack up and deliver incredible overall results.

4. Focus on creative solutions

This may seem a little generic, but all too often we constrain our thinking with existing ways of working.

As an example, one company I have worked with wanted to get a complex developer platform online quickly. The engineering team drafted a plan to build a complex infrastructure, complete with APIs and a difficult-to-understand process for using it.

The founder responded with “just put a damn web server online and let people upload files”. He was right: as a minimum viable product (for a young, and potentially experimental project), he wanted to focus on shipping something that worked.

As Reid Hoffman, founder of LinkedIn, once famously said:

“If you’re not embarrassed by the first version of your product, you’ve launched too late.”

Reid is right. Be like Reid.

5. Build a hackable culture

If there is one thing I have learned about innovation over the years, it is that you can’t predict where it comes from. One such example is Jack Andraka, who invented a cheaper and more effective pancreatic cancer test when he was in high school.

You can’t instruct someone to be innovative, but you can build a culture that encourages and allows people to innovate. Innovation requires permission to flourish, and to accomplish this, you need to encourage and allow people to produce interesting hacks that do interesting things.

Encourage your teams to explore new ideas, build them, and demo them. Encourage people to hack on and improve products and services as proof of concepts. If you have a permissive environment that encourages people to hack, explore, and be creative, you will get that same kind of ethos when you create production products and services.

This can be nerve-wracking for some companies because it can feel like it encourages people to challenge the norms of the company. It does, and that is a good thing. Part of being a hackable culture is to actively encourage people to call you on your bullshit and propose better, more efficient, and more interesting solutions.

I would LOVE to hear your thoughts here. What do you think of these recommendations? Do you agree or disagree with them? Can they be improved? How else can we build better things? Share your ideas and feedback in the comments below…

The Importance of Validation and Decay In Community Reputation Metrics

A few weeks ago I spoke at DevXCon in San Francisco. I delivered a keynote about measuring the health of a community and how we can tie effective measurements into community reputation, incentives, and other elements. In that talk I touched on the importance of validation and decay and I wanted to take a moment to explain what these concepts are and why they are important.

Firstly, there are two categories of things we can measure in communities:

- Tangible – these are the things we can measure with computers such as messages, pull requests, issues, questions posted to websites, questions answered etc.

- Intangible – these are the analogue things associated with humans that are more complex to measure such as satisfaction, happiness, motivation, respect etc.

Today I want to focus on the tangible (I will cover the intangible side in a future post).

Enter Reputation

When measuring tangible participation in a community (such as organizing events, contributing code, playing a game, or something else), various communities will generate a “reputation” score that reflects the aggregate set of contributions. The way this is presented varies in different communities.

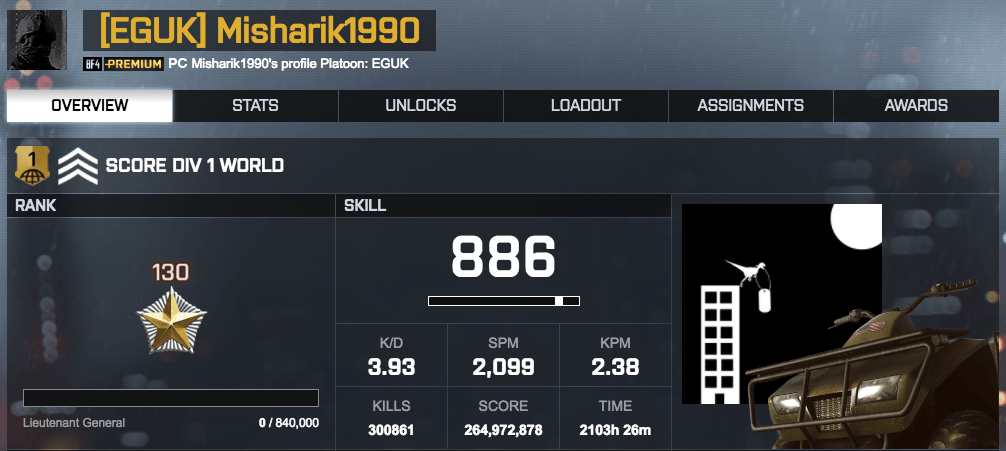

For example, Battlefield 4 awards you various points based upon your skill demonstrated in the game, ultimately rolling up to your score:

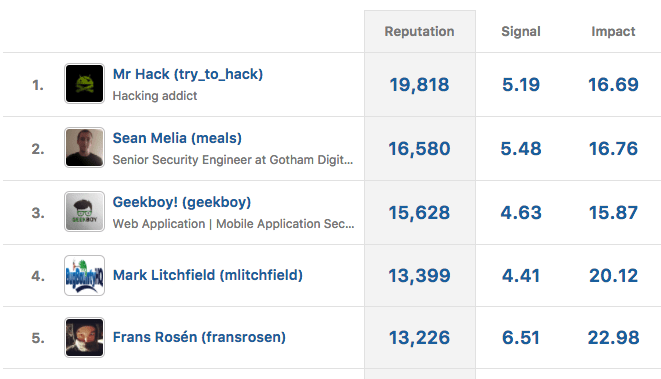

HackerOne (a service for vulnerability report submission and bounties) takes a slightly different approach and actually presents three scores:

Here, reputation covers the total points given for submitted reports, signal covers the average points awarded for a reviewed report, and impact covers points awarded to programs with bounties.

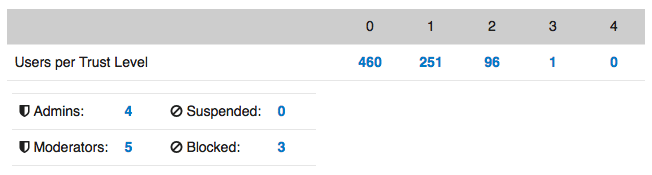

Discourse (a popular forum platform) does this a little differently and breaks users down into trust levels based on how they use the forum (across reading, writing, having content liked and more):

This doesn’t show an individual score for each user but instead shows which users share similar levels of activity and participation.

There are benefits and disadvantages to all of these approaches, but there is clearly value in generating some kind of numerical representation of quality of participation. It can provide a useful way of engaging with community members, providing rewards, opening up additional opportunities, and more.

Activity and Validation

The tricky thing with measuring participation is that you run the risk of people gaming the system.

A classic example of this was some of the older forum platforms. With some (such as phpBB) you could set ranks based upon the number of posts made to the forum. So for example, you may have these labels next to a forum user based on the threshold of posts to the forum:

- < 100 = Newbie

- 100 – 500 = Regular

- 500 – 1500 = Rockstar

- 1500+ = Epic
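
To make the weakness concrete, here is a minimal sketch of a rank like this in Python. It is not how phpBB implements ranks; the `rank_for` function and the handling of boundary values (a count of exactly 100, 500, or 1500 moves up a rank) are my own assumptions, since the ranges above overlap at their edges.

```python
from bisect import bisect_right

# Thresholds from the list above. Treating an exact threshold value as the
# higher rank is an assumption made for this sketch.
THRESHOLDS = [100, 500, 1500]
LABELS = ["Newbie", "Regular", "Rockstar", "Epic"]


def rank_for(post_count: int) -> str:
    """Return the rank label earned by a raw post count."""
    # bisect_right counts how many thresholds the post count has reached.
    return LABELS[bisect_right(THRESHOLDS, post_count)]
```

Notice that the only input is the post count. Nothing about the quality of those posts is considered, which is exactly the gap people exploit.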

As you can imagine, some people would deliberately post small and pointless replies to these forums. I recently interviewed Jeff Atwood, who I consider to be one of the brightest minds on building collaborative platforms, and he summed it up well:

“Remember, whenever you put a number next to someone’s name, you are now playing with dynamite. People will do whatever they can to make that number go up, even if it makes no sense at all, or has long since stopped being reasonable. Carefully consider all the implications of that number carefully before you put it anywhere, and take a moment to think about what Evil People will do with it, as well.”

One way in which I like to think of measuring things is to measure two components: the activity and the validation of that activity. This provides the ability for us to not just count the number of contributions (which is helpful in determining the level of activity and for how long), but also if another member of the community has determined it to be valuable, which can be a good indicator of quality.

We already see this in the HackerOne example above. The reputation score is a good indicator of the level of activity, but some users may “shotgun” low quality reports to inflate their reputation score. This is where the signal number comes in: it shows the average quality of reports, so the higher the number, the better the overall report quality from that hacker.

In the software development world the key example of this is a merged pull request. It is one thing for someone to submit code for review for inclusion in a project, but a merged pull request shows that (a) someone contributed code, (b) it was reviewed by their peers, (c) it was deemed high quality, and (d) it added value to the project and was thus merged.

We can explore all kinds of ways of validating contributions and they don’t have to be heavyweight. How many messages have received a like? How many people upvoted a question? How many people showed up to a meetup? Even lightweight ways of determining a simple level of validation can be helpful.

It is essential to track both. If you only track activity, the system can be gamed. If you track validation too, you can more intelligently interpret the data.
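
As a rough illustration of tracking both, the Python sketch below counts each member's contributions (activity) alongside how many were validated by someone else. The `Contribution` record, the `summarize` function, and the sample data are all hypothetical; what counts as "validated" (a merged pull request, a like, an upvote) is up to your community.

```python
from dataclasses import dataclass


@dataclass
class Contribution:
    author: str
    validated: bool  # e.g. the pull request was merged or the post was liked


def summarize(contributions):
    """Return {author: (activity, validated, validation_rate)}."""
    totals = {}
    for c in contributions:
        activity, validated = totals.get(c.author, (0, 0))
        totals[c.author] = (activity + 1, validated + (1 if c.validated else 0))
    return {
        author: (activity, validated, validated / activity)
        for author, (activity, validated) in totals.items()
    }


# Hypothetical data: bob is far more active, but little of it is validated.
history = (
    [Contribution("alice", True)] * 3
    + [Contribution("bob", False)] * 9
    + [Contribution("bob", True)]
)
summary = summarize(history)
```

Ranked on activity alone, bob wins comfortably with ten contributions to alice's three. With the validation rate beside it, you can see that all of alice's work was accepted and only a tenth of bob's was.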

Fairness and Decay

Rather unsurprisingly, when you start tracking reputation in some form, there is a temptation to build leaderboards to instill a little competitive spirit for people to see who can get their scores the highest. While this varies from community to community, it works. People love to compete, and it can be a fun way to encourage people to improve their skills or participate more.

A core goal I have when I build communities for organizations is that community strategy should encourage the right kind of behaviors. We want to build systems and processes that get people participating in a positive way.

I like to approach community strategy with the goal of creating significant and sustained participation that builds a sense of belonging. We don’t just want drive-by participation, we want people to stick around for long periods of time, and to do that there is a strong psychological desire for people to build a sense of respect and status in the community. For them to accomplish this, we need to prevent our communities from being a place where the rockstars (who have done great work for many years) can never be unseated from their pedestals.

This is why decay is so important. When you have a reputation score, it is important that the score will gradually decrease with lack of participation over time. This should not be too quick: we need to allow people to go on vacation, have kids, or otherwise take time away, and not have their hard-earned reputation points evaporate.

It is important, though, to gradually decrease reputation scores for inactivity, for two reasons:

- If you don’t, your community will be rigged. Anyone who joins after the very beginning of the community will never be able to catch up. It will reward the early participants unfairly.

- Your community will also lack a forcing function to encourage people to be actively participating. The inverse of this forcing function is that people will feel they can coast and still have a very respectable reputation score.
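
One way this kind of decay could work, sketched in Python: leave the score untouched during a grace period, then shrink it slowly the longer someone stays away. The `decayed_score` function, the 90-day grace period, and the one-year half-life are illustrative assumptions, not recommended values; tune them to the rhythm of your own community.

```python
def decayed_score(score: float, days_inactive: int,
                  grace_days: int = 90, half_life_days: int = 365) -> float:
    """Gradually reduce a reputation score after a period of inactivity.

    grace_days and half_life_days are assumed values for illustration only.
    """
    if days_inactive <= grace_days:
        return score  # vacations and short breaks cost nothing
    overdue = days_inactive - grace_days
    # The score halves for every half_life_days beyond the grace period.
    return score * 0.5 ** (overdue / half_life_days)
```

With these numbers, someone with 1,000 points who takes a month off keeps all of them, while someone who has been gone for a year beyond the grace period drops to 500. The decay is slow enough to forgive real life, but steady enough that newer members can catch up.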

Of course, there are no hard rules in any of this, but I recommend you apply these guidelines when thinking about generating reputation scores. The most important thing to focus on is that your scores are fair, they can’t be gamed in an undesirable way, and they reward people for quality participation.

What do you think? Do you have further recommendations or ideas you want to share? Did I say something you want to challenge? Share it in the comments below…