A few weeks ago I spoke at DevXCon in San Francisco. I delivered a keynote about measuring the health of a community and how we can tie effective measurements into community reputation, incentives, and other elements. In that talk I touched on the importance of validation and decay, and I want to take a moment to explain what these concepts are and why they matter.

Firstly, there are two categories of things we can measure in communities:

- Tangible – these are the things we can measure with computers such as messages, pull requests, issues, questions posted to websites, questions answered etc.

- Intangible – these are the analogue things associated with humans that are more complex to measure such as satisfaction, happiness, motivation, respect etc.

Today I want to focus on the tangible (I will cover the intangible side in a future post).

Enter Reputation

When measuring tangible participation in a community (such as organizing events, contributing code, playing a game, or something else), various communities will generate a “reputation” score that reflects the aggregate set of contributions. The way this is presented varies in different communities.

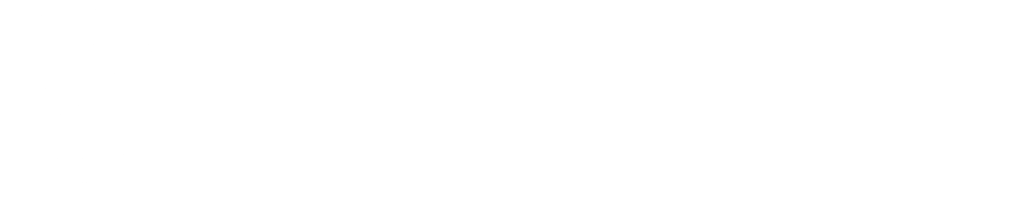

For example, Battlefield 4 awards you various points based upon your skill demonstrated in the game, ultimately rolling up to your score:

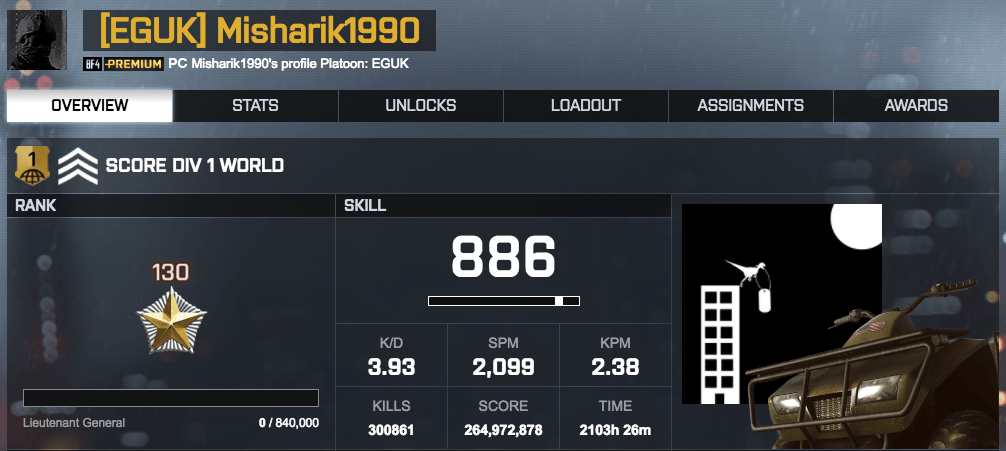

HackerOne (a service for vulnerability report submission and bounties) takes a slightly different approach and actually presents three scores:

Here, reputation covers the total points given for submitted reports, signal covers the average points awarded for a reviewed report, and impact covers points awarded to programs with bounties.

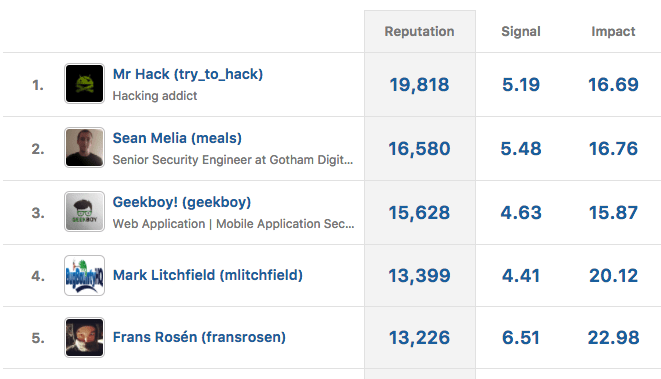

Discourse (a popular forum platform) does this a little differently and breaks users down into trust levels based on how they use the forum (across reading, writing, having content liked and more):

This doesn’t show an individual score for each user but instead shows which users share similar levels of activity and participation.

There are benefits and disadvantages to all of these approaches, but there is clearly value in generating some kind of numerical representation of quality of participation. It can provide a useful way of engaging with community members, providing rewards, opening up additional opportunities, and more.

Activity and Validation

The tricky thing with measuring participation is that you run the risk of people gaming the system.

A classic example of this comes from some of the older forum platforms. With some (such as phpBB) you could set ranks based on the number of posts a user had made to the forum. So, for example, you might see these labels next to a forum user based on post-count thresholds:

- < 100 = Newbie

- 100 – 500 = Regular

- 500 – 1500 = Rockstar

- 1500+ = Epic
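To make the weakness concrete, here is a minimal sketch of a post-count rank lookup. The function name `rank_for` is hypothetical, and treating each lower bound as inclusive is my assumption, since the ranges above overlap at 500 and 1500. It is not how phpBB itself implements ranks.

```python
from bisect import bisect_right

# Thresholds taken from the list above. Treating each lower bound as
# inclusive (e.g. exactly 500 posts = Rockstar) is an assumption.
RANK_THRESHOLDS = [100, 500, 1500]
RANK_LABELS = ["Newbie", "Regular", "Rockstar", "Epic"]


def rank_for(post_count: int) -> str:
    """Return the rank label for a raw post count (hypothetical helper)."""
    return RANK_LABELS[bisect_right(RANK_THRESHOLDS, post_count)]


# The only input is the number of posts, so 1500 one-word replies
# earn the same rank as 1500 thoughtful answers.
print(rank_for(42), rank_for(750), rank_for(1500))
```

Nothing in this lookup knows anything about what was posted, which is exactly what makes it easy to game.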

As you can imagine, some people would deliberately post small and pointless replies to these forums. I recently interviewed Jeff Atwood, who I consider to be one of the brightest minds on building collaborative platforms, and he summed it up well:

Remember, whenever you put a number next to someone’s name, you are now playing with dynamite. People will do whatever they can to make that number go up, even if it makes no sense at all, or has long since stopped being reasonable. Carefully consider all the implications of that number carefully before you put it anywhere, and take a moment to think about what Evil People will do with it, as well.

One way I like to think about measurement is to measure two components: the activity and the validation of that activity. This lets us not just count the number of contributions (which is helpful in determining the level of activity and for how long), but also see whether another member of the community has determined a contribution to be valuable, which can be a good indicator of quality.

We already see this in the HackerOne example above. The reputation score is a good indicator of the level of activity, but some users may “shotgun” low-quality reports to inflate their reputation score. This is where the signal number comes in: it shows the average quality of reports, so the higher the number, the better the overall report quality from that hacker.

In the software development world the key example of this is a merged pull request. It is one thing for someone to submit code for review for inclusion in a project, but a merged pull request shows that (a) someone contributed code, (b) it was reviewed by their peers, (c) it was deemed high quality, and (d) it added value to the project and was thus merged.

We can explore all kinds of ways of validating contributions, and they don’t have to be heavyweight. How many messages have received a like? How many people upvoted a question? How many people showed up to a meetup? Even lightweight ways of determining a simple level of validation can be helpful.

It is essential to track both. If you only track activity, the system can be gamed. If you track validation too, you can more intelligently interpret the data.
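As a rough illustration of tracking both, here is a small sketch that reports activity and validation side by side. The `Contribution` type, the `summarize` function, and the idea of a single `validated` flag are all assumptions for the example; in practice validation could be a merge, a like, an upvote, or anything else another member does.

```python
from dataclasses import dataclass


@dataclass
class Contribution:
    author: str
    validated: bool  # e.g. merged, liked, or upvoted by another member


def summarize(contributions, author):
    """Return (activity, validated, ratio) for one author (hypothetical helper)."""
    mine = [c for c in contributions if c.author == author]
    activity = len(mine)                            # how much they did
    validated = sum(1 for c in mine if c.validated) # how much others valued
    ratio = validated / activity if activity else 0.0
    return activity, validated, ratio


log = [
    Contribution("alice", True),
    Contribution("alice", True),
    Contribution("bob", False),
    Contribution("bob", False),
    Contribution("bob", False),
    Contribution("bob", True),
]
print(summarize(log, "alice"))
print(summarize(log, "bob"))
```

In this made-up data, bob is more active than alice, but a much smaller share of his contributions were validated. Looking at either number alone would tell a misleading story.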

Fairness and Decay

Rather unsurprisingly, when you start tracking reputation in some form, there is a temptation to build leaderboards to instill a little competitive spirit for people to see who can get their scores the highest. While this varies from community to community, it works. People love to compete, and it can be a fun way to encourage people to improve their skills or participate more.

A core goal I have when I build communities for organizations is that community strategy should encourage the right kind of behaviors. We want to build systems and processes that get people participating in a positive way.

I like to approach community strategy with the goal of creating significant and sustained participation that builds a sense of belonging. We don’t just want drive-by participation, we want people to stick around for long periods of time, and to do that there is a strong psychological desire for people to build a sense of respect and status in the community. For them to accomplish this, we need to prevent our communities from being a place where the rockstars (who have done great work for many years) can never be unseated from their pedestals.

This is why decay is so important. When you have a reputation score, it is important that the score gradually decreases with lack of participation over time. This should not happen too quickly: we need to allow people to take time away, whether for vacations, kids, or anything else, without their hard-earned reputation points evaporating.

It is important, though, to gradually decrease reputation scores from inactivity, for two reasons:

- If you don’t, your community will be rigged. Anyone who joins after the very beginning will never be able to catch up with the earliest members, so the system rewards early participants unfairly.

- Your community will also lack a forcing function to encourage people to keep participating. Without it, people will feel they can coast and still hold a very respectable reputation score.
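One possible way to sketch gradual decay is a grace period followed by a slow exponential decline. The function `decayed_score` and the values for `grace_days` and `half_life_days` are hypothetical choices for illustration, not numbers from any platform mentioned here. You would want to tune them for your own community.

```python
def decayed_score(
    score: float,
    days_inactive: int,
    grace_days: int = 90,           # assumed: no penalty for a normal break
    half_life_days: float = 365.0,  # assumed: score halves per year after that
) -> float:
    """Return a reputation score reduced for inactivity (illustrative only)."""
    if days_inactive <= grace_days:
        # Vacations, new kids, and other time away cost nothing.
        return score
    overdue = days_inactive - grace_days
    return score * 0.5 ** (overdue / half_life_days)


print(decayed_score(1000, 30))        # within the grace period
print(decayed_score(1000, 90 + 365))  # one half-life past the grace period
```

The grace period protects people who step away for a while, and the long half-life means a veteran's score fades slowly rather than vanishing, while still leaving room for newer members to catch up.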

Of course, there are no hard rules in any of this, but I recommend you apply these guidelines when thinking about generating reputation scores. The most important thing to focus on is that your scores are fair, they can’t be gamed in an undesirable way, and they reward people for quality participation.

What do you think? Do you have further recommendations or ideas you want to share? Did I say something you want to challenge? Share it in the comments below…